An information security strategy, supported by a design that combines its own security infrastructure with a well-defined map of needs, key processes and risks, can improve your level of reliability and your peace of mind. This is why we will look at the importance of Network Security Architectures.

Organizations have long been more reactive than proactive in the field of computer security. Security elements have sometimes been added because they were fashionable, other times out of an imminent need to solve a problem, and in some cases after an attack had already caused a crisis.

Within a single organization you can find different security technologies and architectures working together in a complex infrastructure. Budget cuts to the IT department are also very common, which makes it harder to keep this infrastructure up to date and running, and can even make operating it an impossible task.

For these reasons, system administrators must develop a strategy that lets them evaluate the different alternatives and choose the one that best suits their needs and budget. But where should we start?

Here are some recommendations for Network Security Architectures:

- Stick to the guidelines of your security policies.

- Every decision must be justified and well-founded.

- Being proactive is better than being reactive.

- Respect the principle of least privilege.

- Incorporate audit elements.

Choosing good security elements is a hard task, so let’s look at some well-known and proven options.

Network security tools

One-factor authentication: The most traditional method. Network security starts with user authentication, generally using a username and password, but this method is very weak on its own.
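
To see why a password alone is a weak factor, it helps to look at what a server should do with it. The sketch below assumes the server stores a salted PBKDF2-HMAC-SHA256 hash instead of the password itself; the function names and the iteration count are illustrative assumptions, not a recommendation for any particular product. Even with storage done this way, anyone who learns the password can still log in.

```python
import hashlib
import hmac
import secrets
from typing import Tuple

# Illustrative work factor (an assumption); tune it to your hardware and current guidance.
ITERATIONS = 200_000


def hash_password(password: str) -> Tuple[bytes, bytes]:
    """Return (salt, derived_key) to store; the clear-text password is never kept."""
    salt = secrets.token_bytes(16)  # a fresh random salt per user
    key = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, key


def verify_password(password: str, salt: bytes, stored_key: bytes) -> bool:
    """Re-derive the key from the candidate password and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, stored_key)


salt, key = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, key))  # True
print(verify_password("wrong guess", salt, key))                    # False
```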

Two-factor authentication: Here the user must verify an additional element, something they have, such as a mobile phone, a USB key or a hardware token.
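
Many authenticator apps and hardware tokens implement the second factor as a time-based one-time password (TOTP, RFC 6238). The sketch below assumes a secret shared between the server and the user's device at enrollment; `totp` and `verify_totp` are hypothetical helper names, and SMS codes, push approvals or FIDO keys are other common choices.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, for_time: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238) using HMAC-SHA1, the RFC's default."""
    if for_time is None:
        for_time = time.time()
    counter = int(for_time // step)  # whole time steps since the Unix epoch
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation, as defined in RFC 4226
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify_totp(secret: bytes, submitted: str, now: Optional[float] = None,
                step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept codes from the current step and +/- `window` steps to tolerate clock drift."""
    if now is None:
        now = time.time()
    return any(
        hmac.compare_digest(totp(secret, now + i * step, step, digits), submitted)
        for i in range(-window, window + 1)
    )


SECRET = b"12345678901234567890"  # the RFC 6238 test secret; never reuse it in production
print(totp(SECRET, for_time=59, digits=8))  # 94287082 (RFC 6238 test vector)
```

The server and the device compute the same code independently, so the secret itself never travels over the network at login time.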

Three-factor authentication: This method adds something the user is, a biometric trait such as a retina scan or a fingerprint.

Firewall: Once the user has been verified, the next step is to ensure that the security policies are followed. A firewall monitors and controls incoming and outgoing traffic between the internet and the network it guards, and restricts what users can access within the network and from where.

A firewall is not capable of making judgments on its own. Instead, it follows programmed rules. These rules dictate whether the firewall should let a packet through the network barrier. If a packet matches a pattern that indicates danger, the corresponding rule will instruct the firewall not to let the packet through. These rules have to be constantly updated because the criteria for what patterns indicate a dangerous packet change frequently.
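
The rule-following behavior described above can be sketched as a first-match rule list with a default-deny policy. This is a simplified model: the `Rule` and `Packet` types, their fields and the example rules are hypothetical, and real firewalls add connection state, interfaces, directions and many more match criteria.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Rule:
    action: str              # "allow" or "deny"
    src: str                 # source network in CIDR notation
    dst_port: Optional[int]  # None matches any destination port
    protocol: str            # "tcp", "udp" or "any"


@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_port: int
    protocol: str


def matches(rule: Rule, packet: Packet) -> bool:
    """True if every field of the rule matches the packet."""
    return (
        ipaddress.ip_address(packet.src_ip) in ipaddress.ip_network(rule.src)
        and (rule.dst_port is None or rule.dst_port == packet.dst_port)
        and rule.protocol in ("any", packet.protocol)
    )


def evaluate(rules: List[Rule], packet: Packet, default: str = "deny") -> str:
    """Apply the first matching rule; if none matches, fall back to the default."""
    for rule in rules:
        if matches(rule, packet):
            return rule.action
    return default


RULES = [
    Rule("deny", "203.0.113.0/24", None, "any"),  # block a range flagged as dangerous
    Rule("allow", "0.0.0.0/0", 443, "tcp"),       # HTTPS from anywhere
    Rule("allow", "10.0.0.0/8", 22, "tcp"),       # SSH only from internal addresses
]

print(evaluate(RULES, Packet("198.51.100.7", 443, "tcp")))  # allow
print(evaluate(RULES, Packet("203.0.113.9", 443, "tcp")))   # deny: the first rule matches
print(evaluate(RULES, Packet("198.51.100.7", 22, "tcp")))   # deny: no rule matches
```

Because the first match wins, rule order matters: the deny rule sits above the broad HTTPS rule. Keeping the firewall current as dangerous patterns change amounts to editing this list.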

Encryption: More than a tool, this is a process that encodes communications between hosts so that only they can read the information. Encryption does not by itself prevent interception, but it denies intelligible content to an interceptor.

It could provide the following:


- Confidentiality: the message’s content is encoded so that only authorized parties can read it.

- Authentication: verifies the origin of a message.

- Integrity: proves that the contents of a message have not been changed since it was sent.

- Nonrepudiation: prevents senders from denying that they sent the encrypted message.
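
Integrity and authentication can be illustrated with a message authentication code. The sketch below assumes the two hosts already share a secret key (`shared_key`, `sign` and `verify` are hypothetical names). It does not provide confidentiality, since the message itself is not encrypted, and it does not provide nonrepudiation: both parties hold the same key, so either could have produced the tag, which is why nonrepudiation requires digital signatures.

```python
import hashlib
import hmac
import secrets

# Assumption: the two hosts exchanged this key securely beforehand.
shared_key = secrets.token_bytes(32)


def sign(message: bytes, key: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag that binds the message to the shared key."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(message: bytes, tag: bytes, key: bytes) -> bool:
    """Recompute the tag and compare in constant time to avoid timing leaks."""
    return hmac.compare_digest(sign(message, key), tag)


msg = b"transfer 100 EUR to account 42"
tag = sign(msg, shared_key)
print(verify(msg, tag, shared_key))                                # True: unchanged, right key
print(verify(b"transfer 900 EUR to account 42", tag, shared_key))  # False: content was altered
```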

Network Segmentation: A strategy that consists of dividing a network into subnets in order to improve performance and make analysis and intervention easier. Network segmentation is a highly effective way to contain threats and prevent them from spreading within a system.

Network segmentation can also ensure that people only have access to the resources they need. This is done by assigning each subnet the authorization level required to access it.

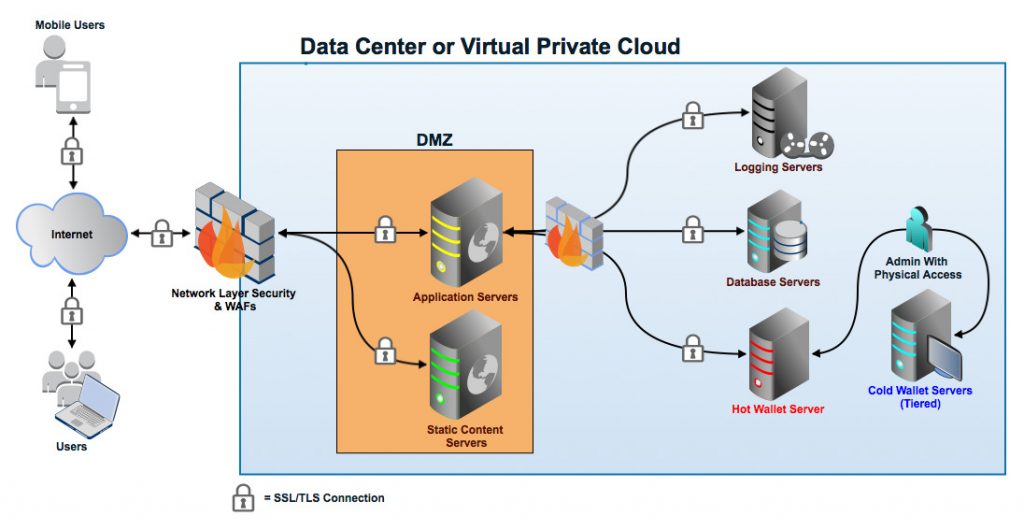
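
The mapping between subnets and authorization levels can be sketched with Python's `ipaddress` module. The address range, segment names and clearance levels are hypothetical; in a real network the segments would be enforced by VLANs, routing and firewall rules, not by a lookup like this one.

```python
import ipaddress
from typing import Optional

CAMPUS = ipaddress.ip_network("10.20.0.0/16")

# Hypothetical segments and the minimum clearance needed to reach each one.
SEGMENT_LEVELS = [("guest", 0), ("office", 1), ("servers", 2), ("management", 3)]

# Carve consecutive /24 subnets out of the /16 and assign one to each segment.
SEGMENTS = {
    name: (subnet, level)
    for (name, level), subnet in zip(SEGMENT_LEVELS, CAMPUS.subnets(new_prefix=24))
}


def segment_of(ip: str) -> Optional[str]:
    """Return the name of the segment containing `ip`, or None if it is unknown."""
    addr = ipaddress.ip_address(ip)
    for name, (subnet, _) in SEGMENTS.items():
        if addr in subnet:
            return name
    return None


def can_access(clearance: int, target_ip: str) -> bool:
    """Allow access only if the user's clearance meets the target segment's level."""
    name = segment_of(target_ip)
    return name is not None and clearance >= SEGMENTS[name][1]


for name, (subnet, level) in SEGMENTS.items():
    print(f"{name:<11}{subnet}  requires level {level}")
print(can_access(1, "10.20.1.15"))  # True: office segment
print(can_access(1, "10.20.3.5"))   # False: management needs level 3
```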

We can be confident about what we do with our internal infrastructure and our own devices, but what happens when users need to connect their own devices to our network? We cannot consider those devices secure, so we need a way to let them reach their services without putting our whole infrastructure and services at risk. Let’s look at a couple of options for doing this:

Virtual Private Network (VPN)

A VPN is a secure tunnel that connects your device to a private network over a less secure network, such as the public internet. It uses tunneling protocols to encrypt data at the sending end and decrypt it at the receiving end. To provide additional security, the originating and receiving network addresses are also encrypted.

This means that the connected user can access the services and applications that reside in the private network, with the same restrictions that the user has inside that network.

VPN protocols provide an appropriate level of security for connected systems when the underlying network infrastructure cannot provide it on its own. Several protocols are used to secure and encrypt user and corporate data, including:

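- IP Security (IPsec)

- Secure Sockets Layer (SSL) and Transport Layer Security (TLS)

- Point-to-Point Tunneling Protocol (PPTP), now considered insecure

- Layer 2 Tunneling Protocol (L2TP), which does not encrypt traffic by itself and is usually combined with IPsec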

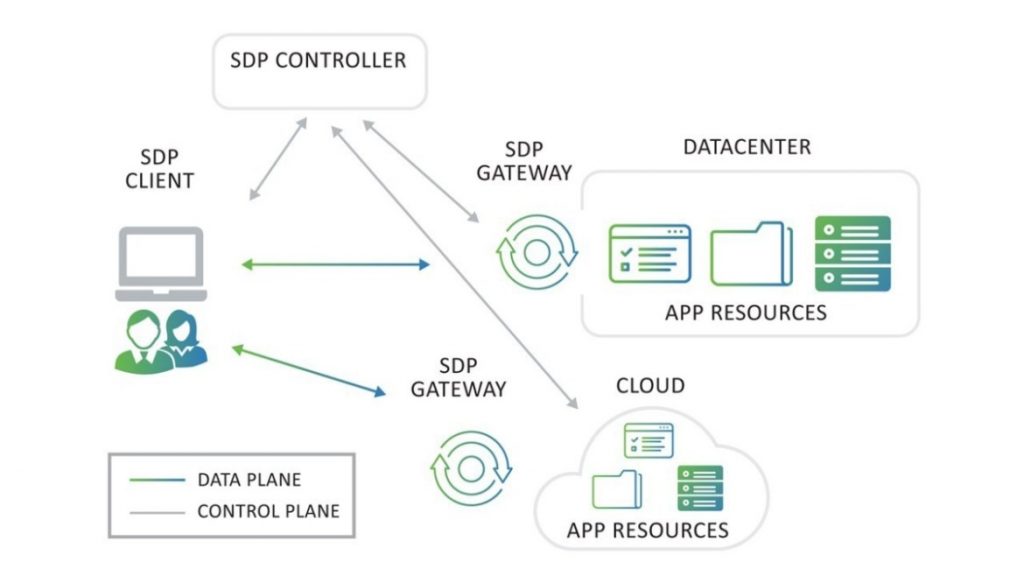

Software Defined Perimeter (SDP)

Users need access to resources in various cloud services, wherever those resources are located. Users do not, and should not, care where the applications are hosted; they simply need access to them. In addition, the growing use of mobile devices demands access anytime and anywhere.

A new approach is therefore needed, one that enables application owners to protect infrastructure located in a public or private cloud and in on-premises data centers. This network architecture is known as the software-defined perimeter, also called the “Black Cloud”. Its key focus is to create a security system that helps prevent attacks on cloud systems.

Back in 2013, the Cloud Security Alliance (CSA) launched the SDP initiative, a project to develop an architecture for building more robust networks. SDP builds on the zero trust model, which removes implicit trust from the network.

SDP relies on two major pillars: authentication and authorization. Endpoints must first authenticate and be authorized before they obtain network access to the protected entities. Only then are encrypted connections created in real time between the requesting systems and the application infrastructure.
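
The two stages can be sketched as a controller that issues a short-lived grant only after both checks pass. The user store, the policy table, the `Grant` type and the service names are hypothetical, and the CSA specification defines its own components (an SDP controller plus initiating and accepting hosts) and message formats, which this sketch does not reproduce. The encrypted connection itself is not implemented here.

```python
import hmac
import secrets
import time
from dataclasses import dataclass
from typing import Optional

# Hypothetical controller state; in practice these come from an identity
# provider and a policy store, and credentials are never kept in clear text.
USERS = {"alice": "s3cr3t-token"}
POLICY = {"alice": {"crm.internal:443"}}


@dataclass
class Grant:
    user: str
    service: str
    session_key: str
    expires_at: float


def authenticate(user: str, credential: str) -> bool:
    """Stage 1: prove who the requester is (constant-time comparison)."""
    expected = USERS.get(user)
    return expected is not None and hmac.compare_digest(
        expected.encode("utf-8"), credential.encode("utf-8")
    )


def authorize(user: str, service: str) -> bool:
    """Stage 2: check that a policy explicitly allows this user to reach this service."""
    return service in POLICY.get(user, set())


def request_access(user: str, credential: str, service: str,
                   ttl: float = 300.0) -> Optional[Grant]:
    if not authenticate(user, credential) or not authorize(user, service):
        return None  # nothing is revealed and no connection is set up
    # Only at this point would the controller tell the gateway to accept an
    # encrypted connection from this client; here we only mint a short-lived key.
    return Grant(user, service, secrets.token_hex(16), time.time() + ttl)


print(request_access("alice", "s3cr3t-token", "crm.internal:443") is not None)  # True
print(request_access("alice", "s3cr3t-token", "db.internal:5432"))              # None
print(request_access("mallory", "guess", "crm.internal:443"))                   # None
```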

Within a zero trust network, privilege is more dynamic than in traditional networks, because many different attributes of activity are used to determine a trust score. Access to an application should therefore be based on parameters such as who the user is, an assessment of the device’s security posture, and continuous assessment of the session. Only then should access be permitted.
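
A trust score built from several attributes, and re-evaluated whenever the session context changes, might look like the sketch below. The attributes, weights and threshold are hypothetical; real products use their own signals and scoring models.

```python
from dataclasses import dataclass


@dataclass
class SessionContext:
    mfa_passed: bool
    device_managed: bool
    os_patched: bool
    disk_encrypted: bool
    usual_location: bool


# Hypothetical weights (summing to 100) and access threshold.
WEIGHTS = {
    "mfa_passed": 30,
    "device_managed": 25,
    "os_patched": 20,
    "disk_encrypted": 15,
    "usual_location": 10,
}
THRESHOLD = 70


def trust_score(ctx: SessionContext) -> int:
    """Add up the weights of every attribute that currently holds."""
    return sum(weight for attr, weight in WEIGHTS.items() if getattr(ctx, attr))


def allow(ctx: SessionContext) -> bool:
    return trust_score(ctx) >= THRESHOLD


session = SessionContext(True, True, True, True, False)
print(trust_score(session), allow(session))  # 90 True
session.device_managed = False               # posture changes mid-session
print(trust_score(session), allow(session))  # 65 False: access is revoked
```

Calling `allow` again on every posture report, rather than only at login, is what turns a one-time check into continuous assessment.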

Authenticating and authorizing users and their devices before allowing even a single packet to reach the target service enforces what is known as least privilege at the network layer. Essentially, the concept of least privilege is that an entity is granted only the minimum privileges it needs to get its work done.

Connectivity is based on a need-to-know model. Under this model, no DNS information, internal IP addresses or visible ports of the internal network infrastructure are transmitted. As a result, SDP hides the network and its applications from anyone who is not authorized to reach them, which is why SDP is considered “The dark network”.
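
The need-to-know model can be sketched as a controller that discloses connection details only for the services a user is entitled to, so everything else stays invisible. The catalogue, addresses and entitlements below are hypothetical.

```python
from typing import Dict

# Hypothetical internal services; their addresses must never reach unauthorized clients.
CATALOGUE = {
    "crm": {"host": "10.20.2.10", "port": 443},
    "payroll": {"host": "10.20.2.20", "port": 8443},
    "backups": {"host": "10.20.3.5", "port": 22},
}
ENTITLEMENTS = {"alice": {"crm"}, "bob": {"crm", "payroll"}}


def visible_services(user: str) -> Dict[str, Dict[str, object]]:
    """Return connection details only for the services this user is entitled to."""
    allowed = ENTITLEMENTS.get(user, set())
    return {name: dict(info) for name, info in CATALOGUE.items() if name in allowed}


print(visible_services("alice"))    # {'crm': {'host': '10.20.2.10', 'port': 443}}
print(visible_services("mallory"))  # {}
```

An unknown user receives an empty answer rather than an error, so probing the controller reveals nothing about what exists behind it.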

Access is granted directly from users and their devices to the application or resource, regardless of the underlying network infrastructure.

Finally, we must keep in mind that a security scheme is only as robust as its weakest link, and that strengthening the security architecture is a priority.

We must stay informed and keep a self-critical attitude so that we are ready to make decisions and the most appropriate adjustments to our Network Security Architectures.